关键词 > COSC2527/2528

COSC2527/2528 Games and Artificial Intelligence Techniques Assignment 2

发布时间:2024-05-05

Hello, dear friend, you can consult us at any time if you have any questions, add WeChat: daixieit

Games and Artificial Intelligence Techniques - COSC2527/2528

Assignment 2

|

Assessment Type |

Individual assignment. Submit online via GitHub Classroom. The last commit prior to the assignment deadline will be graded. Marks awarded for meeting requirements as closely as possible. Clarifications/updates may be made via announcements/relevant discussion forums. |

|

Due Date |

Friday 3rd May, 2024, 11:59pm |

|

Marks |

30. |

1 Overview

The focus of this assignment is on search algorithms, where an agent simulates possible future trajectories in order to calculate a plan. We will consider two specific algorithms: A* and Monte Carlo Tree Search (MCTS). The A* algorithm strives to find the cheapest route to a goal by simulating various paths, while the MCTS algorithm is designed for turn-based games, evaluating game states in the search tree via random rollouts.

The first part of the assignment requires you to demonstrate your understanding of A* by adapting an existing implementation into the Assignment 1 project and extending it in various ways. In the second part of the assignment, you will build an MCTS agent for the strategy game,Connect 4.

Starter code for the assignment is provided on GitHub Classroom; see Canvas for instructions on how to create your repo.

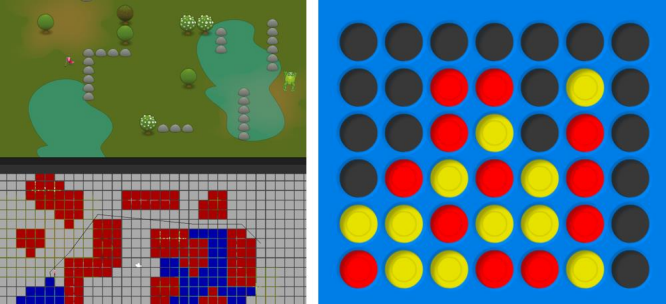

Figure 1: Assignment 2 focuses on A* pathfinding (right) and MCTS applied to Connect 4 (right).

2 Learning Outcomes

This assessment relates to the following learning outcomes:

• [CLO1]: Apply various AI techniques and tools in the context of games programming.

• [CLO2]: Design and develop a gaming application, based on existing games engines or platforms.

3 Specification

The requirements for the two parts of the assignment are detailed below.

Part 1: A* Pathfinding (15 marks)

For Part 1, refer to the Pathfinding scene in the starter code. A pathfinding implementation based onSebastian Lague’s A* tutorialhas been roughly incorporated into the project, but it is lacking in various ways. You should begin by studying the Pathfinding game object and the associated code in Scripts/Pathfinding to understand how it works. Note that the frog doesn’t actually use pathfinding yet -- it's still configured to move directly towards the right-clicked location via Arrive.

Make the frog follow the A* path (4 marks)

When the player right-clicks a location, the path variable in Scripts/Frog.cs gets set to an array of nodes corresponding to the shortest path from the frog to the target location. This path is sketched as a black line via Debug.Drawline() in FixedUpdate(). For this task, you’re required to update the movement logic in FixedUpdate() so that the frog follows the A* path, rather than moving directly towards the clicked position.

Notes:

• You’ll need to think about when the frog should move on to the next waypoint in the path. One solution is to continually refresh the path (this is Sebastian Lague’s approach),but this entails a needlessly high computational burden that you should avoid.

• You should aim to make the path following look as natural as possible. The frog should still move via steering behaviours, and you think carefully about which steering behaviours to use. The frog shouldn’t collide with corners very often (see Michael’s video on the Assignment 2 Canvas page for expected performance).

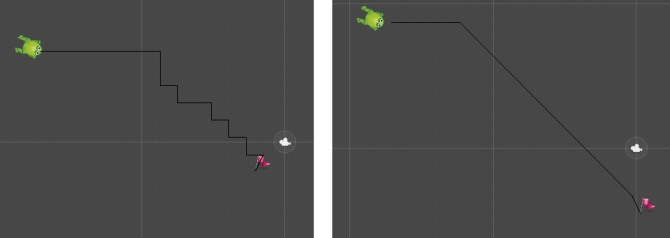

Update the A* algorithm to allow diagonal neighbours (4 marks)

The A* code provided considers only cardinal movements (up, down, left, right) when determining the neighbours of anode -- see GetNeighbours() in Scripts/Pathfinding/AStarGrid.cs. This yields very clunky-looking paths; see Figure 2 (left) for an illustration. Your task is to update the algorithm to treat diagonal nodes as neighbours too, which should yield more natural-looking paths.

Aside from modifying GetNeighbours(), you should think about which other parts of the algorithm need to be updated. The algorithm should still be guaranteed to return the shortest path (i.e., the length of the black line drawn should be as short as possible, given the allowed movements) and you should consider the issues raised towards the end of Week 5’s class.

It should be possible to enable/disable diagonal neighbours by toggling the variable includeDiagonalNeighbours, which is defined in AStarGrid.cs.

Figure 2: Examples of the types of paths found with diagonal neighbours disabled (left) and enabled (right).

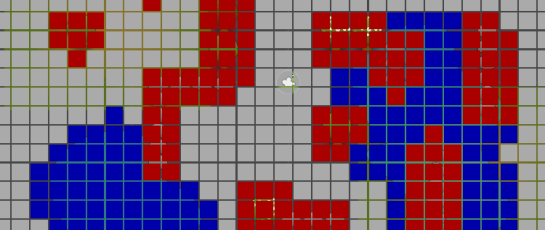

Implement water cost (3 marks)

The game scene for Assignment 2 has been updated to include lakes that slow the frog down.

However, the provided pathfinding implementation makes no distinction between dry land and water. For this task, you’re required to modify the algorithm so that it treats moving through water as being more costly than moving across dry land.

The extra cost of moving through water is defined by the variable waterCostMultiplier in Scripts/Pathfinding/Pathfinding.cs. Its default value is 3, which means that moving to a water node should be considered as 3 times more costly than moving to a dry land node. (Note that the cost of moving to a neighbour should be determined by the node moved to, not the node moved from.)

To tackle this problem, you’ll first need to determine which nodes are in the water. You should follow a similar approach to how the existing code determines whether anode is walkable or not. You should also update the gizmos to indicate which nodes are in the water (see Figure 3).

Figure 3: Illustration of the how the gizmos should look after updating to display water nodes. Red = unwalkable, blue = water node, grey = dry land.

If implemented correctly, the frog should sometimes favour a long path on dry land over a shorter path that goes through water. See Michael’s video on the Assignment 2 page for an indication of expected behaviour.

Implement path smoothing (4 marks)

While including diagonal neighbours yields smoother trajectories, the paths still don’t look completely natural. For example, on the right side of Figure 2, a rational character would simply walk directly towards the flag if the path was unobstructed. This is not possible if the character is restricted to up, down, left, right and 45o movements. To address this, you’re required to implement path smoothing, as outlined in the Week 5 slides.

Your code should be placed inside the SmoothPath() method in Scripts/Pathfinding/Pathfinding.cs, which is intended to take anunsmoothed path as input and return a smoothed path. To achieve full marks, your implementation should simplify paths where possible, but it should not cause the frog to bump into corners or traverse through water nodes that the unsmoothed path avoids.

Part 2: MCTS for Connect4 (15 marks)

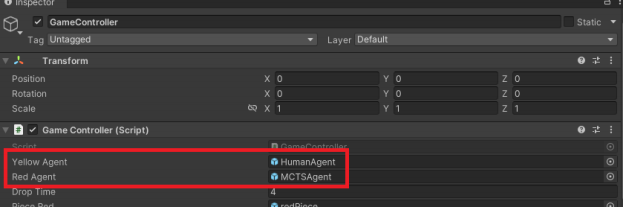

For this part of the assignment, see the Connect4 scene in the starter project.

The game has been setup so that red and yellow sides can be played by either humans or AI agents. To see how this is configured, click on the GameController object, and note that the red and yellow agents are defined by prefabs:

Figure 4: Agent configuration for Connect 4.

There are four prefabs already setup in Prefabs/Agents:

• HumanAgent: Self-explanatory.

• RandomAgent: An agent that randomly selects which column to play in.

• MonteCarloAgent: You are required to implement this agent, per the spec below. Code should be placed in Scripts/Connect4/MonteCarloAgent.cs.

• MCTSAgent: You are required to implement this agent, per the spec below. Code should be placed in Scripts/Connect4/MCTSAgent.cs.

Implement a basic Monte Carlo agent (4 marks)

For this task, you’re required to implement the basic Monte Carlo agent described in the Week 6 slides. The agent should evaluate each of the available moves by running random simulations from the subsequent states and averaging the results.

Notes:

• The only method you should modify for this agent is GetMove().

• The total number of simulations to run is defined by the variable totalSims. This should be split evenly between the available actions, i.e., if totalSims = 2500 and there are 5 possible columns to play in, each action should be evaluated using 2500 ÷ 5 = 500 simulations.

• Before simulating, you will need to create a copy of the current state so that the simulations don’t affect the real game state. You can use Clone() in Scripts/Connect4/Connect4State.cs.

• You may also find the argMin() and argMax() methods in Scripts/Connect4/Agent.cs helpful. These return the index of the minimum and maximum elements in an array of floats, respectively.

If implemented correctly, you should find that this agent is reasonably difficult to play against (with the default totalSims value of 2500). It generally plays aggressively and often sets up 3-in-a-row, because setting up immediate threats often leads to victory in random simulations. I (Michael) can sometimes beat it, but it’s surprisingly tough!

Implement a Monte Carlo Tree Search agent (6 marks)

For this task, you’re required to implement an MCTS agent, as described in Week 6. This agent extends on the basic Monte Carlo agent above by iteratively building a search tree.

Implementing this agent intended to be challenging, and while it’s natural to look at online repos if you’re struggling (in fact, I would recommend this --just don’t look at other students’ code!), you should not attempt to copy an existing implementation into the starter project and/or overhaul the starter project completely. You can create a class for the MCTS search nodes if you wish, but besides this, the only method you should edit is GetMove().

If implemented correctly, the MCTS agent should be significantly stronger than the basic Monte Carlo agent. I ran 50 matches between my agents with their default settings (2500 simulations each, c = 0.5 for the MCTS agent) and the MCTS agent scored 45 wins, 4 losses and 1 draw.

Enhance the MCTS agent (5 marks)

This final task is intended to be more open-ended. Your objective is to create a tuned MCTS agent that outperforms the MCTS agent implemented above, without increasing its runtime.

To facilitate this, you should start by adding a configuration option to the MCTS script that allows the simulation budget to be specified in terms of milliseconds, instead of a fixed number of simulations. (Ideally, it should be possible to specify the budget in either manner.) If the simulation budget is set to, say, 1000 milliseconds, then GetMove() should run until 1000 milliseconds elapse.

Next, create a copy of the MCTS agent class and call it TunedMCTSAgent. Setup an appropriate prefab so that it is possible to play a match between the tuned agent and the original MCTS agent. You should now be setup well to experiment with enhancements.

In terms of specific enhancements to try, here are some suggestions:

• Try tuning the value of the exploration constant, c.

• Try modifying the rollout policy (the action selection mechanism used during the rollouts) to be more intelligent than uniform random moves. There are lots of things you could try here, but bear in mind that any heuristic you devise will need to be fast to compute; otherwise, it will reduce the number of simulations you can run within the time budget.

• Try adding an “opening book”, i.e., a database of strong opening moves. You could try

finding one online, or you could try running MCTS with a very high computational budget and save its evaluations to a file.

• Make it so that the agent remembers the game tree from its previous move (and culls the no longer relevant branches).

• Use multithreading to run multiple simulations in parallel and average the results when backpropagating.

• This isn’t expected, but if you’re feeling very ambitious, you could lookup papers on MCTS enhancements on Google Scholar.

This component will be marked primarily based on the correctness of your implementation and the ambitiousness of the enhancements tried. Trying only a single, basic approach such as tuning the value of c will attract only 1-2 marks. A single, sophisticated approach implemented well could attract full marks, but as a rule of thumb, you should ideally try at least two enhancements. Speak to Michael in class if you are unsure about this.

Please include a brief description of what you tried in the README file, and give some indication of the strength of your strongest agent, e.g., run several matches against the original MCTS agent and provide the score. Please include instructions on how to replicate any results so that the markers can validate them.

If you’re unable to tackle this task because you failed to implement MCTS, you can try optimising the basic Monte Carlo agent instead, although you will receive less marks for this.

I have opted against forcing students to take part in a competition, but if you are interested in seeing how your agent fares against others, let me know and I may run a side competition with a small prize if there is sufficient interest.

4 Submission

You do not need to submit anything on Canvas for this assignment; we will just grade your most recent commit on GitHub Classroom. Please note:

• As explained on Canvas, please use Unity LTS Release 2022.3.19f1. We will deduct one mark if you submit your project with a different Unity version.

• Please fill in your student number in README.md, especially if you are using anon-RMIT GitHub account.

• Late submissions will incur a penalty of 10% of the total score possible per calendar day.

• If you do submit late, please let us know so that we download the correct version of your assignment.

• Information on applying for special consideration is available here:

https://www.rmit.edu.au/students/my-course/assessment-results/special-consideration- extensions/special-consideration

5 Academic integrity and plagiarism (standard warning)

Academic integrity is about honest presentation of your academic work. It means acknowledging the work of others while developing your own insights, knowledge and ideas. You should take extreme care that you have:

• Acknowledged words, data, diagrams, models, frameworks and/or ideas of others you have quoted (i.e. directly copied), summarised, paraphrased, discussed or mentioned in your assessment through the appropriate referencing methods.

• Provided a reference list of the publication details so your reader can locate the source if

necessary. This includes material taken from Internet sites. If you do not acknowledge the sources of your material, you maybe accused of plagiarism because you have passed off the work and ideas of another person without appropriate referencing, as if they were your own.

RMIT University treats plagiarism as a very serious offence constituting misconduct. Plagiarism covers a variety of inappropriate behaviours, including:

• Failure to properly document a source.

• Copyright material from the internet or databases.

• Collusion between students.

For further information on our policies and procedures, please refer to the following:

https://www.rmit.edu.au/students/student-essentials/rights-and-responsibilities/academic-integrity.

We will run code similarity checks.

6 Marking Guide

The rubric used for marking will be provided on Canvas at the bottom of the assignment page.