DTS304TC Machine Learning Assessment Task 2

Hello, dear friend, you can consult us at any time if you have any questions, add WeChat: daixieit

DTS304TC Machine Learning

Coursework - Assessment Task 2

Submission deadline: TBD

Percentage in final mark: 50%

Learning outcomes assessed: C, D

![]() Learning outcome C: apply ML algorithms for specific problems.

Learning outcome C: apply ML algorithms for specific problems.

![]() Learning outcome D: Demonstrate proficiency in identifying and customizing aspects on ML algorithms to meet particular needs.

Learning outcome D: Demonstrate proficiency in identifying and customizing aspects on ML algorithms to meet particular needs.

Individual/Group: Individual

Length: This assessment comprises a guided coding and experimentation project, along with an associated research report.

Late policy: 5% of the total marks available for the assessment shall be deducted from the assessment mark for each working day after the submission date, up to a maximum of five working days

Risks:

. Please read the coursework instructions and requirements carefully. Not following these instructions and requirements may result in loss of marks.

. The formal procedure for submitting coursework at XJTLU is strictly followed. Submission link on Learning Mall will be provided in due course. The submission timestamp on Learning Mall will be used to check late submission.

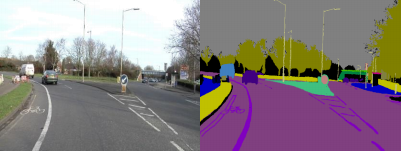

Guided Project: Street-view Semantic Segmentation with U-Net

In this project, you will evaluate, fine-tune, and perform unsupervised domain adaptation of a pretrained U-Net model for street-view semantic segmentation. You will encounter some challenges associated with street-view segmentation, such as segmenting objects in diverse driving scenes and classifying each pixel into 14 categories (sky, road, building, car and etc). The given pretrained model is trained on bright sunny images, which may not perform as well on darker cloudy images. Your task is to fine-tune this model on such images. Furthermore, you will explore unsupervised domain adaptation, assuming no ground truth is available for cloudy images due to the high cost of segmentation annotation. We will employ two methods: (1) pseudo ground truth generation, and (2) adapted batch normalization, to enhance the model's performance on cloudy image set.

The assessment consists of two segments: (1) coding and experimentation, and (2) the composition of a research report. As you progress through the coding and experimentation phase, it is important to record and document all pertinent findings, which will be integrated into your research report.

Before starting this project, please ensure that you have downloaded the pretrained model, assessment codebase, and the example Jupyter notebook.

Notes:

1. Although a GPU-powered machine is preferable for running this project, you can efficiently complete it on a CPU-only machine, such as those provided in school labs. This is due to the small size of both the model and the fine-tuning dataset.

2. You are strongly encouraged to read the provided code and accompanying training notebook as you will be referring to this code and modifying it during the project. You are encouraged to utilize code that was covered during our Lab sessions, as well as other online resources for assistance. Please ensure that you provide proper citations and links to any external resources you employ in your work. However, the use of Generative AI for content generation (such as ChatGPT) is not permitted on all assessed coursework in this module.

Coding and Experimentation Guideline

The experiments are divided into four parts:

1) Evaluate the pretrained model on bright sunny test images (20 marks):

Finish the supplied notebook. The pretrained model and testing dataloader code are provided. You are required to implement the testing loop and evaluation metrics.

a. Testing Loop. Implement the testing loop to predict the testing images using the pretrained model.

Ensure the model is in eval mode and use the torch.no_grad() decorator for accuracy and efficiency.

b. Accuracy Metrics. Within the testing loop, calculate the global image accuracy and per-class accuracy for each image and for the overall dataset.

a) Global image accuracy is the percentage of correctly classified pixels per image.

The overall dataset global image accuracy is calculated by averaging the global image accuracy across all images in the dataset.

b) Per-class accuracy is the Intersection-Over-Union (IOU) score for each of the 14 categories per testing image,with mean-IOU being the average across these categories.

Handle categories not present in both the ground truth and predictions correctly by assigning NaNIOU scores and use numpy.nanmean function for mean-IOU calculation

The overall dataset per-class IOU scores are obtained by computing the average per-class IOU for each category across all images in the dataset.

Present the overall dataset global image accuracy and overall dataset per-class IOU scores in the report. Please observe that there may be significant variation in IOU scores across different classes. Investigate the reasons for this discrepancy and provide explanations in the report.

c. Ranking and Analysis. Rank the testing images by global image accuracy and mean-IOU. Observe that the rankings for global image accuracy and mean-IOU may differ significantly, such that the top or worst-ranked images based on global image accuracy may not be the same when ranked according to mean-IOU. Provide explanations for these differences with example images where rankings vary between the two metrics and state them in the report.

d. Analysis the challenges associated with street-view segmentation in the project report and discuss the difficulties using specific image examples.

2) Evaluate and fine-tune the pretrained model on darker cloudy dataset (12 marks):

Finish the supplied notebook. The pretrained model and testing dataloader code for cloudy dataset are provided in the notebook.

a. Evaluate Cloudy Dataset Performance. Utilize the code from Part 1 to assess the cloudy dataset's performance, which will likely be lower, indicating the need for improvement.

b. Fine-tuning. Adapt the training notebook code to fine-tune the model on the cloudy dataset, adhering to the suggested learning rate and epoch count. Monitor and plot training/validation loss to determine the best fine-tuned model. The reporter should show training/validation loss curves to demonstrate how best fine-tuned model is selected.

c. Re-evaluation. Re-evaluate the best fine-tuned model to confirm performance improvements. The report should state overall dataset global image accuracy and overall dataset per-class IOU scores before and after finetuning.

3) Unsupervised domain adaptation using pseudo-ground truth generation (18 marks):

As the creation of semantic segmentation ground truth is both time-consuming and labor-intensive, we often encounter a surplus of unlabeled images. The challenge of leveraging such unlabeled images to enhance the performance of a model—pretrained on a source domain with characteristics like bright, sunny images—when applied to a target domain containing darker, cloudy images is known as unsupervised domain adaptation. In this part, and subsequently in part (4), we will address the challenge of unsupervised domain adaptation for our cloudy image dataset under the assumption that no ground truth is available for the training set of these images. It is important to note that while ground truth data does exist for the cloudy training dataset, its use is strictly prohibited as it contradicts the purpose of this exercise. Employing ground truth in the training set, specifically within the scope of unsupervised domain adaptation, will incur substantial score penalties for both this segment and part (4).

Pseudo-ground truth generation is a technique commonly used in unsupervised domain adaptation to leverage unlabeled data in the target domain. The idea is to use our pretrained model to predict labels for the unlabeled target domain data. These predictions, despite not being as accurate as human-annotated ground truth, provide a 'good enough' estimate that can be used for further training. The process typically involves the following steps:

![]() Model Prediction: Apply the model trained on the source domain (e.g., bright, sunny images) to the target domain data (e.g., darker, cloudy images) to generate predictions. Since the model is not yet adapted to the target domain, these predictions will not be perfect and will likely contain errors.

Model Prediction: Apply the model trained on the source domain (e.g., bright, sunny images) to the target domain data (e.g., darker, cloudy images) to generate predictions. Since the model is not yet adapted to the target domain, these predictions will not be perfect and will likely contain errors.

![]() Confidence Thresholding: Evaluate the confidence of the model's predictions and retain only those that exceed a certain confidence level. The threshold can be determined empirically. This step ensures that only the predictions the model is most sure about are used in the next stage of training.

Confidence Thresholding: Evaluate the confidence of the model's predictions and retain only those that exceed a certain confidence level. The threshold can be determined empirically. This step ensures that only the predictions the model is most sure about are used in the next stage of training.

![]() Further Training: Use the pseudo-ground truths as labels to further train the model on the target domain data. This step is similar to standard supervised learning but uses generated labels instead of true annotations. The model can be fine-tuned using these labels, allowing it to gradually learn the characteristics of the target domain.

Further Training: Use the pseudo-ground truths as labels to further train the model on the target domain data. This step is similar to standard supervised learning but uses generated labels instead of true annotations. The model can be fine-tuned using these labels, allowing it to gradually learn the characteristics of the target domain.

Complete the following tasks:

a. Experimentation with Model Confidence.

Entropy serves as a conventional indicator of model confidence; a higher entropy in the probabilistic distribution output by a classifier, for instance, suggests uniformity across the scores for the 14 classes, indicating a lower level of certainty in the classification and thus a reduced confidence level. Complete the following experiments:

![]() Compute the entropy map for the segmentation result. Begin by applying the softmax activation function to the model's output to determine the probabilistic scores. Then, utilize the entropy formula to calculate the entropy map for the model.

Compute the entropy map for the segmentation result. Begin by applying the softmax activation function to the model's output to determine the probabilistic scores. Then, utilize the entropy formula to calculate the entropy map for the model.

![]() Compare this entropy map to the segmentation error map, which reflects whether the ground truth matches the predictions at each respective location.

Compare this entropy map to the segmentation error map, which reflects whether the ground truth matches the predictions at each respective location.

![]() For your selected testing images, show both segmentation error map and entropy map examples to demonstrate any findings you can find. Present the images and findings in your report.

For your selected testing images, show both segmentation error map and entropy map examples to demonstrate any findings you can find. Present the images and findings in your report.

![]() Additionally, explore the relationship between model entropy and segmentation error. Flatten the entire entropy map, concatenate the entropy values from across all dataset images, and categorize the pixels into quartiles based on their entropy levels: top 25%, 25-50%, 50-75%, and 75- 100%. In the report, calculate the average segmentation error within these intervals to uncover any patterns or insights.

Additionally, explore the relationship between model entropy and segmentation error. Flatten the entire entropy map, concatenate the entropy values from across all dataset images, and categorize the pixels into quartiles based on their entropy levels: top 25%, 25-50%, 50-75%, and 75- 100%. In the report, calculate the average segmentation error within these intervals to uncover any patterns or insights.

b. Fine-tuning with Pseudo-ground Truth. A notebook has been provided for your use in the domain adaptation process, specifically for fine-tuning the pretrained model to perform well on a cloudy dataset, using the technique of pseudo-ground truth generation. Within this notebook, there are incomplete segments of code related to the loss calculation that you will need to address. Carefully review the code to ensure that after the application of the confidence threshold, the tensor shapes and pixel positions remain correct and conform to the PyTorch requirements for cross-entropy loss (you may also choose to implement a custom loss function if preferred). The fine-tuning should be carried out for 1-5 epochs with a low learning rate, as indicated in the notebook. To maintain simplicity, you can skip the validation step during the fine-tuning phase.

c. Performance Evaluation. Once you've completed the fine-tuning using pseudo-ground truth, evaluate the model's accuracy on the cloudy test images to ascertain any performance improvements. State this evaluation in your report.

4) Unsupervised domain adaptation using adaptive batch normalization (AdaBN) (10 marks):

Our pretrained UNet model, which includes batch normalization layers and was initially trained using bright, sunny images, will undergo an adaptation process through Adaptive Batch Normalization

(AdaBN) to better perform on a target domain featuring dark, cloudy images. The adaptation process is outlined as follows:

![]() Model Preparation: Adjust the model in the training mode to allow the batch normalization layers to update their running statistics, and at the sametime ensure the pretrained convolution weights remain untouched.

Model Preparation: Adjust the model in the training mode to allow the batch normalization layers to update their running statistics, and at the sametime ensure the pretrained convolution weights remain untouched.

![]() Statistics Update: Conduct forward passes with the target domain data, specifically using our cloudy training dataloader, through the UNet model. This step aimstorecalibrate the batch normalization layers by modifying their running mean and variance to align with the target domain's data distribution.

Statistics Update: Conduct forward passes with the target domain data, specifically using our cloudy training dataloader, through the UNet model. This step aimstorecalibrate the batch normalization layers by modifying their running mean and variance to align with the target domain's data distribution.

![]() Switch to Inference: Once the batch normalization layers have been updated to reflect the target domain statistics, transition the model to inference mode.

Switch to Inference: Once the batch normalization layers have been updated to reflect the target domain statistics, transition the model to inference mode.

For more details refer to the paper Li, Y., Wang,N., Shi, J., Liu,J., & Hou,X. (2016). Revisiting batch normalization for practical domain adaptation. arXiv preprint arXiv:1603.04779.

Complete the following tasks:

a. Implement AdaBN. Implement AdaBN to adapt the pretrained UNet model to the dataset of cloudy images. Briefly explain how you implement AdaBN in the report.

b. Model Performance. Assess the adapted model's performance by evaluating its accuracy on cloudy test images. State this evaluation in your report.

Report Guideline (40 Marks)

As part of your assessment for the topic of Street-view Semantic Segmentation with U-Net, you are required to compose a detailed report that discuss the scope of the project, the methodologies employed, the results from your experiments, and a critical analysis of these findings. Additionally, your report should contemplate future research directions that could stem from your work. It is important that you refrain from using ChatGPTfor writing your report. Your report should be structured as follows:

|

Section |

Content |

Weighting |

|

Introduction |

|

10% |

|

Methodology |

|

20% |

|

|

|

|

|

Results |

cloudy dataset, including training/validation loss curves and any improvements observed.

related to the model segmentation error, using examples and interval statistics as instructed.

techniques (pseudo-ground truth generation and adaptive batch normalization) on the model's performance. |

40% |

|

Discussions |

|

25% |

|

Conclusions and References |

|

5% |

Writing and Submission Guidelines

![]() Use a clear and concise academic writing style, with technical terms explained for the reader.

Use a clear and concise academic writing style, with technical terms explained for the reader.

![]() Ensure that the report is typed, spell-checked, and properly referenced.

Ensure that the report is typed, spell-checked, and properly referenced.

![]() Include any relevant images, diagrams, or tables that support your findings (not included in the word count).

Include any relevant images, diagrams, or tables that support your findings (not included in the word count).

![]() Submit your report via the designated online platform in PDF format.

Submit your report via the designated online platform in PDF format.

![]() Remember to attach all required components, including Jupyter notebooks, experimental code, and the trained model.

Remember to attach all required components, including Jupyter notebooks, experimental code, and the trained model.

![]() In Jupyter notebooks: use notebook cells to structure their work with clear headings and explanations and include comments within code cells to describe the purpose and functionality of key sections of code, which will facilitate the grading process.

In Jupyter notebooks: use notebook cells to structure their work with clear headings and explanations and include comments within code cells to describe the purpose and functionality of key sections of code, which will facilitate the grading process.

2024-03-03