CSE 250A. Assignment 2

Hello, dear friend, you can consult us at any time if you have any questions, add WeChat: daixieit

CSE 250A. Assignment 2

2.1 Probabilistic inference

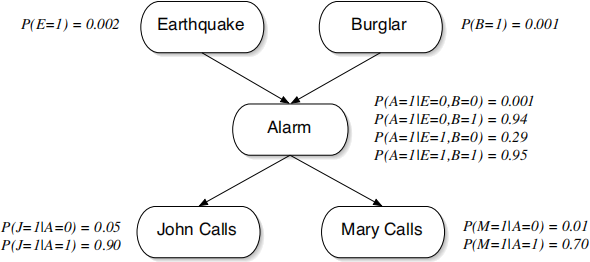

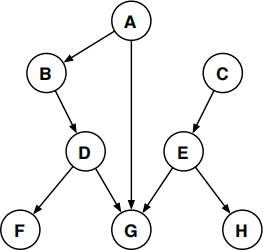

Recall the alarm belief network described in class. The directed acyclic graph (DAG) and conditional probability tables (CPTs) are shown below:

Compute numeric values for the following probabilities, exploiting relations of marginal and conditional independence as much as possible to simplify your calculations. You may re-use numerical results from lecture, but otherwise show your work. Be careful not to drop significant digits in your answer.

(a) P(E = 1|A= 1) (c) P(A= 1|M = 1) (e) P(A= 1|M = 0)

(b) P(E = 1|A= 1, B = 0) (d) P(A= 1|M = 1, J = 0) (f) P(A= 1|M = 0, B = 1)

Consider your results in (b) versus (a), (d) versus (c), and (f) versus (e). Do they seem consistent with commonsense patterns of reasoning?

2.2 Probabilistic reasoning

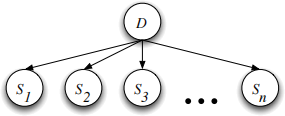

A patient is known to have contracted a rare disease which comes in two forms, represented by the values of a binary random variable D ∈ {0, 1}. Symptoms of the disease are represented by the binary random variables Sk ∈ {0, 1}, and knowledge of the disease is summarized by the belief network:

The conditional probability tables (CPTs) for this belief network are as follows. In the absence of evidence, both forms of the disease are equally likely, with prior probabilities:

In one form of the disease (D = 0), the first symptom occurs with probability one,

P(S1 = 1|D = 0) = 1,

while the kth symptom (with k≥2) occurs with probability

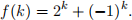

where the function f(k) is defined by

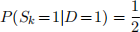

By contrast, in the other form of the disease (D = 1), all the symptoms are uniformly likely to be observed, with

for all k. Suppose that on the k th day of the month, a test is done to determine whether the patient is exhibiting the kth symptom, and that each such test returns a positive result. Thus, on the k th day, the doctor observes the patient with symptoms {S1 = 1, S2 = 1, . . . , Sk = 1}. Based on the cumulative evidence, the doctor makes a new diagnosis each day by computing the ratio:

If this ratio is greater than 1, the doctor diagnoses the patient with the D = 0 form of the disease; otherwise, with the D = 1 form.

(a) Compute the ratio rk as a function of k. How does the doctor’s diagnosis depend on the day of the month? Show your work.

(b) Does the diagnosis become more or less certain as more symptoms are observed? Explain.

2.3 Sigmoid function

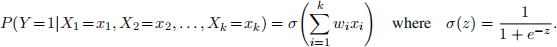

Let Y ∈ {0, 1} denote a binary random variable that depends on k other random variables Xi as:

The real-valued parameters wi in this CPT are known as weights. The so-called sigmoid function σ(z) arises in many contexts. In neural networks, it models the probability that a unit Y fires given its input from other unit Xi ; the weights wi describe the connections between units. In statistics, the sigmoid function appears in models of logistic regression. Sketch the function σ(z), and verify the following properties:

2.4 Conditional independence

Consider the DAG shown below, describing the following domain. Given the month of the year, there is some probability of rain, and also some probability that the sprinkler is turned on. Either of these events leads to some probability that a puddle forms on the sidewalk, which in turn leads to some proba-bility that someone has a fall.

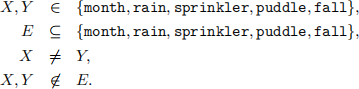

List all the conditional independence relations that must hold in any probability distribution represented by this DAG. More specifically, list all tuples {X, Y, E} such that P(X, Y |E) = P(X|E)P(Y |E), where

Hint: There are sixteen such tuples, not counting those that are equivalent up to exchange of X and Y . Do any of the tuples contain the case E =∅?

2.5 Markov blanket

The Markov blanket BX of a node X in a belief network includes its parents, its children, and the parents of its children (excluding the node X itself); see the picture below.

Appealing to the three conditions for d-separation, prove that for any node Y outside the Markov blanket of X (that is, satisfying Y ̸∈ BX and Y ≠ X), we have:

P(X, Y |BX) = P(X|BX)P(Y |BX).

Hint: A complete solution will consider the five different types of paths from nodes outside the Markov blanket to the node X; the picture below illustrates these five cases (e.g., via a child of a spouse, via a parent of a spouse, etc).

2.6 True or false

For the belief network shown below, indicate whether the following statements of marginal or conditional independence are true (T) or false (F).

P(B|G, C) = P(B|G)

P(F, G|D) = P(F|D) P(G|D)

P(A, C) = P(A) P(C)

P(D|B, F, G) = P(D|B, F, G, A)

P(F, H) = P(F) P(H)

P(D, E|F, H) = P(D|F) P(E|H)

P(F, C|G) = P(F|G) P(C|G)

P(D, E, G) = P(D) P(E) P(G|D, E)

P(H|C) = P(H|A, B, C, D, F)

P(H|A, C) = P(H|A, C, G)

2.7 Subsets

For the belief network shown above, consider the following statements of conditional independence. Indicate the largest subset of nodes S ⊂ {A, B, C, D, E, F} for which each statement is true. Note that one possible answer is the empty set S =∅ or S ={} (whichever notation you prefer). The first one is done as an example.

P(A) = P(A|S) S = {B, C, E}

P(A|D) = P(A|S)

P(A|B, D) = P(A|S)

P(B|D, E) = P(B|S)

P(E) = P(E|S)

P(E|F) = P(E|S)

P(E|D, F) = P(E|S)

P(E|B, C) = P(E|S)

P(F) = P(F|S)

P(F|D) = P(F|S)

P(F|D, E) = P(F|S)

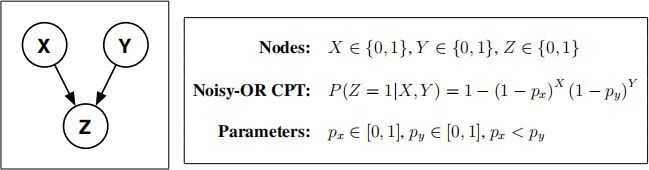

2.8 Noisy-OR

Suppose that the nodes in this network represent binary random variables and that the CPT for P(Z|X, Y ) is parameterized by a noisy-OR model, as shown above. Suppose also that

0 < P(X = 1) < 1,

0 < P(Y = 1) < 1,

while the parameters of the noisy-OR model satisfy:

0 < px < py < 1.

Consider the following pairs of probabilities. In each case, indicate whether the probability on the left is equal (=), greater than (>), or less than (<) the probability on the right. The first one has been filled in for you as an example. (You should use your intuition for these problems; you are not required to show work.)

P(X = 1) = P(X = 1)

(a) P(Z = 1|X = 0, Y = 0) P(Z = 1|X = 0, Y = 1)

(b) P(Z = 1|X = 1, Y = 0) P(Z = 1|X = 0, Y = 1)

(c) P(Z = 1|X = 1, Y = 0) P(Z = 1|X = 1, Y = 1)

(d) P(X = 1) P(X = 1|Z = 1)

(e) P(X = 1) P(X = 1|Y = 1)

(f) P(X = 1|Z = 1) P(X = 1|Y = 1, Z = 1)

(g) P(X = 1) P(Y = 1) P(Z = 1) P(X = 1, Y = 1, Z = 1)

Challenge (optional): for each case, prove rigorously the correctness of your answer.

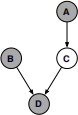

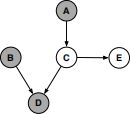

2.9 Polytree inference

Consider the belief network shown above. In this problem you will be guided through an efficient computa-tion of the posterior probability P(F|A, B, D, G). You should perform these computations efficiently—that is, by exploiting the structure of the DAG and not marginalizing over more variables than necessary.

(a) Bayes rule

Show how to compute the posterior probability P(C|A, B, D) in terms of the conditional probability tables (CPTs) for these nodes—i.e., in terms of P(A), P(B), P(C|A), and P(D|B, C). For this problem, it may help to consider just the part of the network shown below.

(b) Marginalization

Show how to compute the posterior probability P(E|A, B, D) in terms of your answer from part (a) and the CPTs of the belief network. For this problem, it may help to consider just the part of the network shown below.

(c) Marginalization

Show how to compute the posterior probability P(G|A, B, D) in terms of your answer from part (b) and the CPTs of the belief network.

(d) Explaining away

Show how to compute the posterior probability P(F|A, B, D, G) in terms of your answers from parts (b,c) and the CPTs of the belief network.

2023-12-27