CS6140 Machine Learning HW6

Hello, dear friend, you can consult us at any time if you have any questions, add WeChat: daixieit

CS6140 Machine Learning

HW6 - SVM and Kernels

PROBLEM 1 SVM library [40 points]

A) Run an SVM from a package or library of your choice on the Spambase dataset. Try

several kernels, including the polynomial and the RBF ones. Report the results. Use one of these packages: SVMlight, SGDSVM, osu SVM, LIBSVM, Matlab SVMtrain, or other

B) Run an SVM from a package or library of your choice on the Digits Dataset (Training data, labels. Testing data, labels). Use your extracted HAAR features from HW5. If you choose an SVM packge that does not provide multi-class implementations, you should write a wrapper that will make the code run for each class versus the others, separately.

PROBLEM 2 Implement your own SVM with SMO solver for Spambase [50 points]

Instead of using a SVM package, implement your own using SMO optimization and run on Spambase dataset with k-folds cross validation. Compare with Problem 1 results. Here is an SMO article, and a SMO simplified version .

PROBLEM 3 Implement your own SVM with SMO solver for Digit Dataset [70 points]

Instead of using a SVM package, implement your own using SMO optimization and run it on the Digits Dataset (Training data, labels. Testing data, labels) with HAAR features

extracted on HW5. Compare with Problem 1 results. The Digits dataset is very learnable, so to speed up the computation you can sample the training set at 10% or 20% per class (but make sure to use the entire testing set for measuring performance).

If you did not extract HAAR Features for HW5, you can use our version of

Since the data has a range of 10 labels (multiclass) while your SVM is a binary classifier, you will have to implement a wrapper on top of the SVM. You can choose one of the

following:

![]() One-vs-the rest approach and train 10 SVM classifiers (one per class)

One-vs-the rest approach and train 10 SVM classifiers (one per class)

· Run ECOC on top of SVMs (similar with HW5 setup, only with SVM instead of boosting)

· We suggest a voting schema: train all possible one-to-one SVM classifiers, for a total of (10 choose 2) = 45 models. Each one of these will train/test only on labeled data for the two particular classes is made for : for example 7vs9 SVM will only train/test on datapoints labeled 7 or 9. To obtain a multiclass classifier: first run (for a given test-datapoint) all 45 models and get their scores; then you would need a voting strategy in order to decide a prediction or a ranking among all 10 classes. Such voting strategy can be to predict the class with most wins, and if there is tie for the most wins to use the direct "match" one-to-one to break the tie.

PROBLEM 4 [GR only ]

Explain why 0 ≤ a ≤ C/mis a constraint in the dual optimization with slack variables. (HINT: read Chris Burges tutorial first) Distinguish three cases, and explain them in terms of the classification and constraints: a) 0 = a; b) 0 < a < C/m; c) a = C/m.

This has been discussed in class and in SVM notes; a detailed rigurous explanation is expected.

PROBLEM 5 k-Nearest Neighbors (kNN)

Implement the kNN classification algorithm such that it can work with different distance functions (or kernels as similarities)

A optional) Fix values of ‘k’= "the number of closest neighbors used",i.e. k=1,k=3, and

k=7.

![]() Spambase dataset. Try k=1,3,7 with Euclidian distance.

Spambase dataset. Try k=1,3,7 with Euclidian distance.

· Digits Dataset, with extracted features. Try k=1,3,7 and the following

similarities/distances: Cosine distance, Gaussian Kernel, Polynomial degree-2 Kernel

B optional) Fixed Window. Instead of using the closest k Neighbors, now we are going to

use all neighbors within a window. It should be clear that a testpoint z1 might have many

neighbors (adense area) in its window, while another testpoint z2 might have only (a sparse area) or none at all.

Fix an appropriate window size around the test datapoint by defining a windows "radius" R , or the maximum distance allowed. Predict the label as the majority or average of training neighbors within the window.

· Run on Spambase dataset with Euclidian distance.

· Run on Digits dataset with cosine distance.

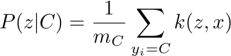

C required) Kernel density estimation. Separately for each label class, estimate P(z|C) using the density estimator given by the kernel K restricted to the training points form class C. There is no need for physical windows, as the kernel is weighting each point by similarity:

where mC is the number of points in the training set with label C. Then predict the class by largest Bayesian probabilities P(C|z) = P(C) * P(z|C) /P(z).

· Run on Spambase dataset with Gaussian kernel.

· (optional) Run on Digits dataset with Gaussian kernel

· (optional) Run on Digits dataset with polynomial kernel.

PROBLEM 6 [optional, no credit]

Consider the following 6 points in 2D, for two classes:

class 0: (1,1) (2,2) (2,0)

class 1: (0,0) (1,0) (0,1)

a) Plot these 6 points, construct the optimal hyperplane by inspection and intuition (give the W,b) and calculate the margin.

b) Which points are support vectors ?

c) [Extra Credit] Construct the hyperplane by solving the dual optimization problem using the Lagrangian. Compare with part (a).

PROBLEM 7 Implement better SMO [optional, no credit]

Extra points will be given for an SMO implementation for both PB2 and PB3 that is reasonable fast.

Extra points will be given for an implementation for both PB2 and PB3 that works with kernels (for example Gaussian Kernel)

PROBLEM 8 [optional, no credit]

Run your SMO-SVM on other datasets.

PROBLEM 9 [Extra Credit]

Same problem as 2, but dont use SMO. Instead use a built in (or existing library) quadratic solver from Matlab, Python, Java, C etc, inn order to solve the dual problem.

PROBLEM 10 [optional, no credit]

What is the VC dimension of the SVM with linear kernel?

2023-12-14

SVM and Kernels