CSCI 5521: Introduction to Machine Learning (Fall 2019)

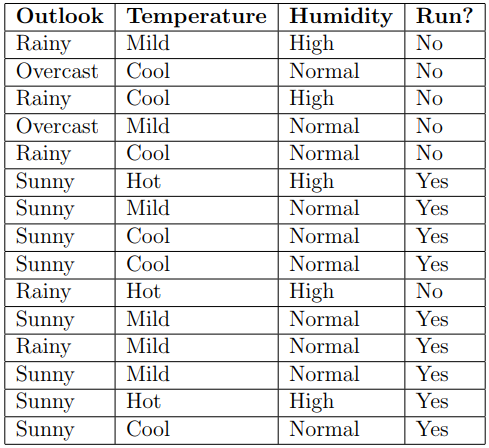

1. (30 points) Table 1 shows data collected on a runner’s decision to go for a run or not go for a run depending on the weather conditions that day. Answer the following questions:

(a) We wish to build a decision tree to help decide if the runner will go for a run tomorrow. Draw the decision tree that fits this data and show how to calculate each node split using entropy as the impurity measure.

Note: If the entropy is the same for two or more features, you can select any of the features to split.

(b) Based on the decision tree, if tomorrow is Overcast, the temperature is Hot, and the Humidity level is Normal, will the runner go for a run?
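Illustration: the entropy calculation behind a node split can be sketched as follows. This is a minimal Python sketch, not the required solution. The rows, the feature names "Outlook" and "Humidity", and the helper names `entropy` and `split_entropy` are hypothetical and are not taken from Table 1.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def split_entropy(rows, labels, feature):
    """Weighted average entropy of the branches created by splitting on one feature."""
    n = len(labels)
    branches = {}
    for row, label in zip(rows, labels):
        branches.setdefault(row[feature], []).append(label)
    return sum(len(b) / n * entropy(b) for b in branches.values())

# Hypothetical toy data for illustration only (NOT the rows of Table 1).
rows = [
    {"Outlook": "Sunny", "Humidity": "High"},
    {"Outlook": "Sunny", "Humidity": "Normal"},
    {"Outlook": "Rain", "Humidity": "High"},
    {"Outlook": "Rain", "Humidity": "Normal"},
]
labels = ["No", "Yes", "No", "Yes"]

root_entropy = entropy(labels)                         # 1.0: two "No", two "Yes"
by_outlook = split_entropy(rows, labels, "Outlook")    # 1.0: both branches stay mixed
by_humidity = split_entropy(rows, labels, "Humidity")  # 0.0: both branches are pure

# The feature with the lowest split entropy is chosen for the split.
best = min(["Outlook", "Humidity"], key=lambda f: split_entropy(rows, labels, f))
```

In this toy data "Humidity" separates the classes perfectly, so it would be chosen at the root. The same two functions can be applied again to the rows that reach each child node.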

2. (20 points) In the Candy Example in the lecture notes (CSci5521 Bayesian Estimation.pdf, Page 6), we observe a candy drawn from some bag: one cherry candy. Please answer the following questions:

(a) What kind of bag is it? Calculate the probability of each bag.

(b) What flavor will the next candy be? Calculate the probability of each flavor.

(c) We then observe a second candy drawn from the bag: one lime candy. Answer (a) and (b) again, now with the two observations: one cherry candy and one lime candy.

Note: Use the equations on Page 5 of the lecture notes.
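Illustration: the general shape of the calculation (posterior over bags, then predictive probability of the next flavor) can be sketched in Python as below. The two bags, their priors, and their flavor proportions are hypothetical and are NOT the values from the lecture notes; the function names `posterior` and `predict_next` are likewise assumptions. Draws are assumed to be independent given the bag.

```python
def posterior(prior, likelihood, observations):
    """P(bag | observations) for each bag, assuming independent draws given the bag."""
    unnormalized = {}
    for bag, p in prior.items():
        for flavor in observations:
            p *= likelihood[bag][flavor]
        unnormalized[bag] = p
    total = sum(unnormalized.values())
    return {bag: p / total for bag, p in unnormalized.items()}

def predict_next(post, likelihood, flavor):
    """P(next candy = flavor | observations), averaging over the bags."""
    return sum(post[bag] * likelihood[bag][flavor] for bag in post)

# Hypothetical bags for illustration only (NOT the lecture-note values).
prior = {"bag_A": 0.5, "bag_B": 0.5}
likelihood = {
    "bag_A": {"cherry": 0.75, "lime": 0.25},
    "bag_B": {"cherry": 0.25, "lime": 0.75},
}

post_one = posterior(prior, likelihood, ["cherry"])
p_cherry_next = predict_next(post_one, likelihood, "cherry")

post_two = posterior(prior, likelihood, ["cherry", "lime"])
```

With these made-up numbers, one cherry candy moves the posterior to 0.75 for bag_A and 0.25 for bag_B, and the probability that the next candy is cherry is 0.625. After a cherry and a lime the two bags are equally likely again.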

3. (50 points) In this programming exercise you will implement a Univariate Decision Tree for optical-digit classification. You will train your Decision Tree using the optdigits train.txt data, tune the minimum node entropy using the optdigits valid.txt data, and test the prediction performance using the optdigits test.txt data. The minimum node entropy is used as a threshold to stop splitting the tree and to set the node as a leaf.

(a) Implement a Decision Tree with the minimum node entropy θ=0.01, 0.05, 0.1, 0.2, 0.4, 0.8, 1.0 and 2.0.

Report the training and validation error rates for each θ. What θ should you use? Report the error rate on the test set using this θ.

(b) What can you say about the model complexity of the Decision Tree, given the training and validation error rates? Briefly explain.
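Illustration: choosing θ from validation error rates can be sketched as below. This is a Python sketch under stated assumptions: the error values are placeholders, not real results, and breaking ties in favor of the larger θ (the smaller tree) is one possible design choice rather than a requirement of this assignment. The names `error_rate` and `select_theta` are hypothetical.

```python
def error_rate(predicted, actual):
    """Fraction of predictions that differ from the true labels."""
    return sum(p != a for p, a in zip(predicted, actual)) / len(actual)

def select_theta(validation_errors):
    """Return the theta with the lowest validation error.

    Ties go to the larger theta, which stops splitting earlier and so gives
    a smaller tree (an assumed tie-breaking rule).
    """
    return min(validation_errors, key=lambda t: (validation_errors[t], -t))

# Placeholder error rates for illustration only (NOT measured results).
validation_errors = {0.01: 0.12, 0.1: 0.10, 0.4: 0.10, 2.0: 0.35}
best_theta = select_theta(validation_errors)

toy_error = error_rate([1, 2, 3, 4], [1, 2, 0, 4])  # one of four labels is wrong
```

Here `best_theta` is 0.4: it ties with 0.1 on validation error and wins the tie because it is larger. The test error is then reported once, for the selected θ only.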

We have provided MATLAB template code, which you are required to use. Please make sure to follow exactly the same input/output parameters provided in each function. Failing to do so may result in points lost. Complete the following files:

• ReadData.m: Reads content from optdigits train.txt, optdigits valid.txt and optdigits test.txt.

• NodeEntropy.m: The function should find the node entropy, in order to decide when to stop splitting the tree.

• SplitEntropy.m: The function finds the split entropy, in order to decide which feature to use for the split.

• GenerateTree.m: Generate a decision tree. The function should be called to find the root node and then recursively for each branch.

• SplitAttribute.m: The function decides which feature to use to split the tree.

• PredictWithTree.m: Makes a prediction for a data point, using the learned tree.

• main.m: Main file that calls the appropriate functions.

More details about the inputs/outputs can be found in each source file.
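Illustration: how the pieces listed above fit together can be sketched in Python as below. This is not the MATLAB template and does not follow its input/output parameters. The function names only mirror the file names, and the binary threshold split `x[j] <= t`, the dictionary tree representation, and the majority-label leaves are all assumptions made for this sketch.

```python
import math
from collections import Counter

def node_entropy(y):
    """Entropy (base 2) of the class labels reaching a node."""
    n = len(y)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(y).values())

def split_attribute(X, y):
    """Return (feature index, threshold) with the lowest split entropy, or None."""
    n, best, best_e = len(y), None, float("inf")
    for j in range(len(X[0])):
        # Skip the largest value: it would leave the right branch empty.
        for t in sorted({row[j] for row in X})[:-1]:
            left = [y[i] for i in range(n) if X[i][j] <= t]
            right = [y[i] for i in range(n) if X[i][j] > t]
            e = (len(left) * node_entropy(left) + len(right) * node_entropy(right)) / n
            if e < best_e:
                best, best_e = (j, t), e
    return best

def generate_tree(X, y, theta):
    """Recursively build the tree; a node with entropy below theta becomes a leaf."""
    split = split_attribute(X, y) if node_entropy(y) >= theta else None
    if split is None:
        return {"leaf": Counter(y).most_common(1)[0][0]}  # majority label
    j, t = split
    left = [i for i in range(len(y)) if X[i][j] <= t]
    right = [i for i in range(len(y)) if X[i][j] > t]
    return {
        "feature": j,
        "threshold": t,
        "left": generate_tree([X[i] for i in left], [y[i] for i in left], theta),
        "right": generate_tree([X[i] for i in right], [y[i] for i in right], theta),
    }

def predict_with_tree(tree, x):
    """Follow the splits from the root until a leaf is reached."""
    while "leaf" not in tree:
        tree = tree["left"] if x[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree["leaf"]

# Hypothetical toy data (NOT the optdigits files): two features, two classes.
X = [[0, 5], [1, 5], [8, 5], [9, 5]]
y = [0, 0, 1, 1]
tree = generate_tree(X, y, theta=0.1)
stump = generate_tree(X, y, theta=2.0)  # root entropy 1.0 < 2.0, so no split
```

On the toy data the tree splits once, on feature 0 at threshold 1, and both children are pure leaves. With θ = 2.0 the root itself becomes a leaf, which shows how a large θ yields a simpler model.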

Submission

• Things to submit: one PDF and one ZIP file:

1. hw4 sol.pdf: a document containing all your answers.

2. For Problem 3, submit a ZIP file containing all the source files required to run your code.

• All material must be submitted electronically via Canvas.

• Do not include the PDF file within the ZIP file. Rather, the PDF document should be submitted as a separate document. Failing to do so may result in points lost.

2019-12-02