DSCI 510 Final Project Homework 4

Hello, dear friend, you can consult us at any time if you have any questions, add WeChat: daixieit

DSCI 510 Final Project

The final project for this course is divided into three sections: Homework 3 (Already submitted), Homework 4 and Homework 5 (including presentation). This document will give you guidelines for Homework 4.

Homework 4 will be for 100 marks each. Note that grades of HW4 will be counted towards Homework along with HW1 and HW2. So, the combined grade for HW1, HW2, HW4 will be scaled down to 20% according to syllabus. Even though this homework is a part of the project, the grade for final project will be based on homework 5 and presentation which will be released later.

Homework 4:

Due Date: April 17, 2023 (You can use your extension days for this homework.)

Objectives:

a. Scraping of the data and show sample data.

b. Use the API to gather the data and show sample data.

c. Model data into a structured format such as ORM, SQL schema, Data Frame, CSV Files, etc. and create a diagram to show how the data joins or is related to each other.

d. Describe maintainability/extensibility of model.

You are going to be submitting a zipped folder (Firstname_Lastname_HW4) containing:

- Your .py script (it has to be a .py script and not a Jupyter notebook)

- Files containing the static data you retrieve from your APIs/scraping tasks (json, csv, relational databases, etc.)

- A simple diagram (drawing) showing what your script does and where it gets data from. This drawing can be a flowchart or a handmade scanned diagram.

- A .txt (README.txt) that explains how to run the code and the general extensibility (how could you extend it) and maintainability (how could it potentially break. For instance, your code might only be able to handle 10,000 rows of data and beyond this it might stop working). Your README.txt should also include the modules and packages that are required to be installed to run your code. Please mention the approximate time taken for different modes to run.

The script must run in 3 separate modes (from the command line):

Static Mode: This just opens the static datasets you create from your scraping/API requests and prints them. Static data can be stored in any desired format (e.g. : .csv, .json etc.). You need to submit your static dataset files in the zipped folder as a part of your submission. If your data is small, you can just print it all, if it is huge just print a few rows and maybe the dimensions of the entire dataset. It can be a csv, relational database (SQL), JSON, XML, etc. The person running a script will have to provide a path to the specific dataset(s) that they want to open and print. That’s why we have the path as a command line argument. It should run from the command line like:

>> python your_script.py --static

Scrape Mode: This performs the actual scraping and API requests but for a few select (5) elements. If your script requires input from a user, you can hard code a few sample inputs. For some of you with small datasets, this may be very similar to the next mode. This is so we don't have to look at the contents of your entire dataset. It should run from the command line like:

>> python your_script.py --scrape

Default mode: This mode performs all the functions from scraping data from web/API to creating the static dataset files to printing the data in the output. This mode scrapes all your data and run all the required API requests, creates the files in which you store the data and prints the data. You might want to figure out a nice way of printing this. We shouldn't have to look at thousands of elements bring printed. In that case, you might again want to show a sampling. It should run from the command line without any special arguments:

>> python your_script.py

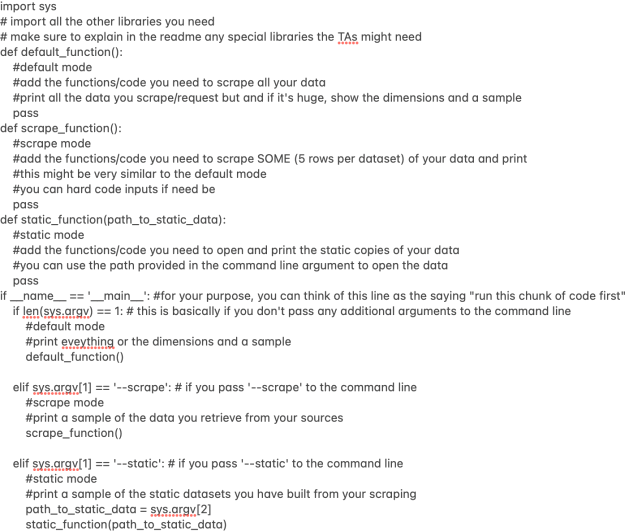

For your reference I have attached a screenshot of the sample code to demonstrate the above format. It is a simple example script to show what the general structure COULD look like. PLEASE NOTE, this is not the only way to go about this and you will obviously need to change things to make the logic work with the code you have.

Some important points to note:

- You are still allowed to make changes in your project and do alterations to your dataset.

- You are allowed to use more than one python files for the final submission but information about every python file, its output, command to run that python file in default, static and scrape mode, and that files functionality has to be clearly mentioned in README.txt

- Rubric for grading your project is as follows - Datasets: 20/20, Default mode: 20/20, Static mode: 20/20, Scrape mode: 20/20, Readme.txt: 10/10, Drawing: 5/5, Waiting Info/print

statements: 5/5. The waiting statements or the print statements are the ones that should be displayed on terminal as output (e.g. : ‘Waiting for dataset to load’, ‘This is my first dataset and it has n rows and m columns’ etc.)

- More about extensibility and maintainability : Extensibility means how you can further extend or generalize your code. How do you plan to extend the functionality of code. Maintainability means what are the points where your code can break (e.g. : your code can only deal with 5000 rows on data and not more than that. Sometimes the response from your URL can be bad (status: 400) so how do you deal with that. If you are using an API through which your data changes after every API call, then you need to mention it in maintainability.) We do not expect you to write big paragraphs on this. You can write about extensibility and maintainability in just 5-6 lines.

- I have uploaded my README.txt as a sample on Blackboard for reference.

- Path that is to be provided in static mode must be a single path. If you want to display three different datasets in static mode, you need to give relative path to a folder that contains all the three datasets. In my case, I was only displaying my final dataset that I got after combining all the three datasets, hence there was only one path to my .csv file in my README.txt

- It is not necessary for you to combine the three datasets. You can keep them separate but while doing analysis you need to use data from all three of them.

In terms of grading, we don't have a specific rubric beyond the requirements we have specified in the instructions. My thoughts on the grading that we will try to run the code in the three different modes and if it does roughly what we require, you'll get full credit. The only case where you will lose points is if we try and run your script and it immediately throws an error, you are outputting something completely unrelated to the project, or you submit the wrong type of files (Jupyter notebooks, text files, etc.). We don't have time to debug every single person's code so please please please test your code before submitting it. To be doubly sure, try running the code on someone else's machine to make sure it works.

2023-04-14